Using n-best Lists

Introduction

This chapter describes two of the most important tools in a Voice User Interface (VUI) designer’s toolbox – the N-best list and the skip-list (Huang, 2001; Ostendorf et al., 1991). The concepts are defined, and their combined usage illustrated and explored. Almost every reasonably complex application could, and should, use them.

Consider the following interaction between an automated system and a caller:

- System: Please say your PIN.

- Caller: Five, five, five, five.

- System: OK, I heard ‘five, five, five, nine.’ Is that right?

- Caller: No.

- System: My mistake. Let’s try that again. Please say your PIN.

- Caller: Five five five five.

- System: OK, I heard ‘five five five nine.’ Is that right?

- Caller: No, you idiot.

What went wrong? The caller told the system that their PIN was NOT 5559 the first time around, yet the second time around, the system persisted in its mistake.

The Skip-List

A simple way to remedy this situation is for the application to maintain a data structure called a skip-list. When the user tells the system (line 4) that 5559 is wrong, the system puts 5559 on the skip-list. On the second recognition try (after line 6), the system looks at its recognition result, and if 5559 is there, it should be skipped over. But wait, what is skipped through?

The N-Best List

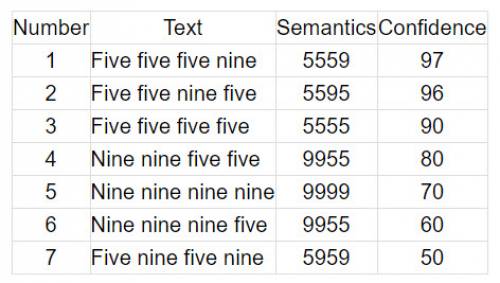

When a speech recognizer performs recognition, the developer can ask that it return not just the most likely thing ‘heard’, but the top two, or three, or N things. This returned data structure is called the N-Best list, and it contains, among other things, the literal text of each recognition hypothesis, the semantic tag, and the confidence score.

Here’s an example of an N-best list for the example above, returned after line 6:

Using N-Best Skip-Lists Together

The revised algorithm for processing after line 6 in our example might go something like:

- For each to-be-skipped item in the skip-list:

- For each recognition result in the n-best list:

- If the to-be-skipped item matches the recognition result:

- Don’t consider it

This algorithm could result in the following dialog, this time with two items on the skip list (which is why it is a list):

- System: Please say your PIN.

- Caller: Five, five, five, five.

- System: OK, I heard ‘five, five, five, nine.’ Is that right?

- Caller: No.

- System: My mistake. Let’s try that again. Please say or enter your PIN.

- Caller: Five five five five.

- System: OK, I heard ‘five five nine five.’ Is that right?

- Caller: No.

- System: I’m really sorry. Let’s try one more time. What’s your PIN?

- Caller: five five five five.

- System: five five five five?

- Caller: Yes!

- System: Thank goodness.

Alternative methods are, of course, possible. One suggestion is that if the difference in scores between the first and second most likely recognition candidates is small, perhaps the system should not re-prompt for a completely new Caller response, but instead offer the second candidate of the previous recognition, as in:

System: Please say your PIN. Caller: Five, five, five, five. System: OK, I heard ‘five, five, five, nine.’ Is that right? Caller: No. System: I see. Did you say ‘five five nine five.’ ? Caller: No. System: I’m really sorry. Let’s try again. What’s your PIN? Caller: five five five five. System: five five five five? Caller: Yes! System: Thank goodness.

To make this work, however, requires a fine understanding of your engine’s confidence scoring. In addition, the first collected utterance might have been noisy, or misarticulated, so the strategy would have probably failed anyway. In this case, informing the caller of recognition difficulty and re-collecting their audio might be more successful.

Useful Applications

Most real world applications require a user to claim an ID, usually with an account number. Really thoughtful applications can take advantage of a caller’s calling number (ANI), but sometimes, either because the ANI was missing, or wrong, or the user was calling from a different, perhaps public, phone, or they were calling from behind a PBX, identification with ANI fails and you still need to ID the caller. Or more specifically, the caller makes a claimed ID, and the system needs to verify the claim, and then often verify the claim.

Imagine a situation where a business has a ten-digit account number for each customer. The set of all account number possibilities is ten to the tenth power, or ten trillion (account numbers from 0,000,000,000 to 9,999,999,999 = 10,000,000,000 possible numbers). Speech geeks measure the complexity of a grammar by its entropy, or the size of things it can recognize.

The simplest grammar only has two things to recognize – say ‘yes’ or ‘no’. The chance of getting this wrong if you simply guess is 50-50. A ten digit account number grammar is much harder – the chance of getting it wrong, if you simply guess, is 9,999,999,999/10,000,000,000, which is pretty bad odds. Now no company actually has ten trillion accounts (that I know of). Heck, the population of the planet it is only in the tens of billions. How can we improve the odds?

One strategy is to build a grammar that only contains the actual account numbers. This can be done, but it runs into a number of difficulties. First, it requires a lot of work, on an ongoing basis – each time a caller calls in, the set of all actual account numbers needs to be collected and pushed into a grammar. This takes time and processing power. Second, and more importantly, what happens if the number the caller gives you is not an actual account number? The grammar will never recognize it, because it is not ‘covered’ by the grammar. That is, the recognition result will either be empty (no recognition) or it will be incorrect, that is, it is not what the user said. Misrecognition is the absolute bane of any speech application, as it quickly deteriorates into a death-spiral of confusion.

So if you can’t build a grammar of the actual account numbers, what’s a VUID to do? Use an N-best list process with the huge, any ten-digit grammar, but filter the result against a database of the actual account numbers. The grammar generation is easy – it’s any ten digit number. Chances are, the user will speak a real account number, and if that number is in the database, the answer should be found. The chance that the system will hallucinate a different actual number is probably pretty low.

The processing is a bit more complex than the previous example:

For each to-be-skipped account number in the skip-list: For each recognition result in the n-best list: If the to-be-skipped item matches the recognition result: Take it off the n-best list Result = most likely item from (skip-list filtered) n-best list For each account number in the new (skip-list filtered) n-best list: Check the account number database to see if the reco result is an actual account number If it is an actual account number: Result = account number

At the end of this processing, either there has been a successful recognition, or not, and if there was, either an actual account number has been found, or not.

This process can be shortened if you have the ability to write a custom webservice or other access to the back end, so that this logic can be moved to a business logic layer rather than being done in the VUI layer. For example, a hypothetical webservice 'checkNBest' could take the top 5 or top 10 entries in the n-best list and return only the valid choices. If the caller rejects the first one then the skip list would come into play.

Areas of Potential Usage

Using an n-best strategy is particularly useful where the grammar needs to be larger than the actual set of acceptable data. Consider, for example, air travel booking – the set of possible cities is much larger than the set of cities that actually have airports. The set of actual credit card accounts is much smaller than the set of possible credit card accounts. What about dates? If a user says ‘September 31st’ this is not a possible day, but should it be in a grammar? To push this a little farther, what about ‘Wednesday, September 15, 2005’?

Drawbacks

There are a couple of potential drawbacks to using a skip-list. The first is that it is possible to incorrectly put something on the skip-list. This could happen either due to a recognizer mistake (get the confirmation response wrong), or a user mistake (just plain answer the question wrong). Both happen. And it leaves no way to recover.

The second is simply time and money. It take extra development effort to implement. Thus it's probably not warranted at every single menu or input.

In a discussion group at SpeechTek 2013, a potential problem with a simple skip list was raised. Sometimes callers will reject what they actually wanted, and if that gets put into a skip list, then the application will no longer present that recognition result to the user. A possible solution to this is to modify the skip list programming so rather than absolutely preventing an item in the skip list from being reused, the item(s) in the skip list are penalized to some extent. Thus, if the acoustic evidence is just overwhelming, an item in the skip list might be offered to the user again. If, however, the item is in a skip list and just barely beats the next item in the n-best list, that penalty will knock it down in the list. The exact amount of the penalty can be tuned to minimize, but probably not completely eliminate, presentation errors. This is a more complex method, but it addresses the complexity of the real world – a world in which callers will be angry if an application seems to keep recognizing something even though they have rejected it, but in which some callers will accidentally reject something they actually wanted.

Conclusion

Using N-Best Skip-Lists should be a standard VUID best-practice employed in most speech applications. Every design should be evaluated for potential usage. Experience has shown that the extra effort goes a long way in making applications more usable and successful.

Further Reading

Skip Lists

Advanced confirmation and error correction with confidence levels and n-best lists

References

Huang, X., Acero, A., & Hon, H. (2001). Spoken language processing: A guide to theory, algorithm and system development. Upper Saddle River, NJ: Prentice Hall. (See pp. 664-666.)

Ostendorf, M., Kannan, A., Austin, S., Kimball, O., Schwartz, R., & Rohlicek, J. R. (1991). Integration of diverse recognition methodologies through reevaluation of n-best sentence hypotheses. In Proceedings of DARPA Workshop on Speech and Natural Language (pp. 83-87). Stroudsburg, PA: Association for Computational Linguistics.